Overview

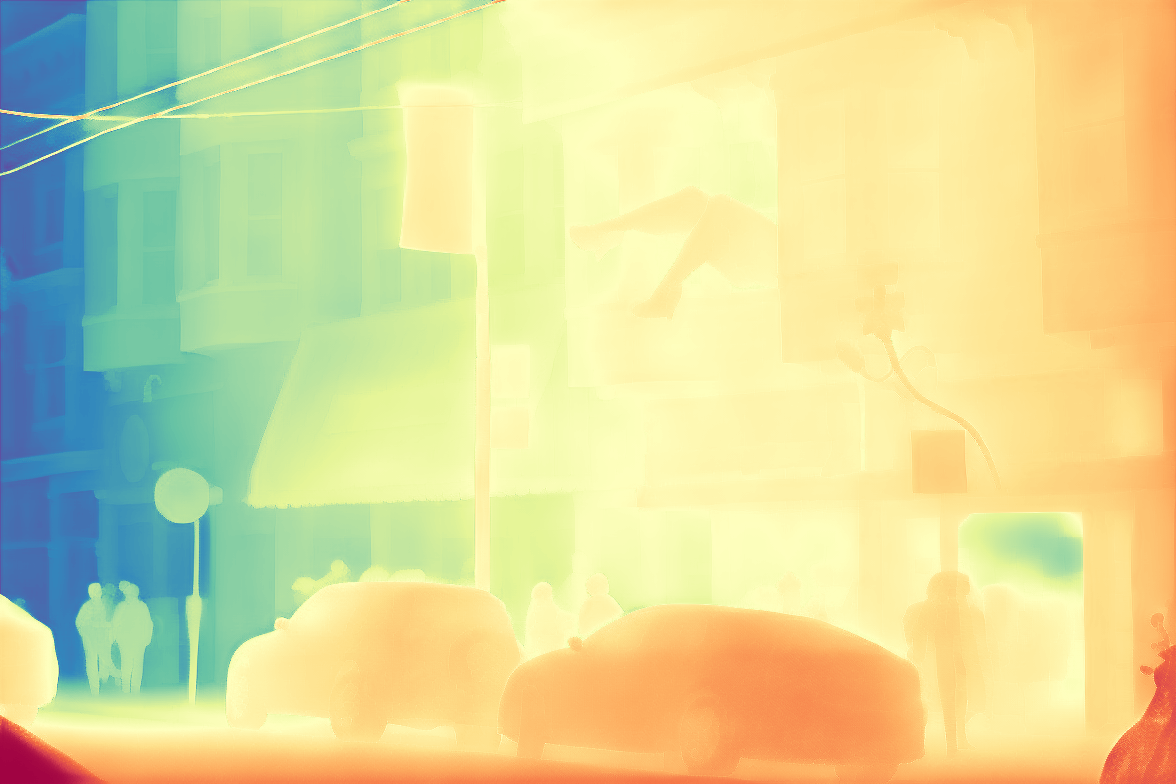

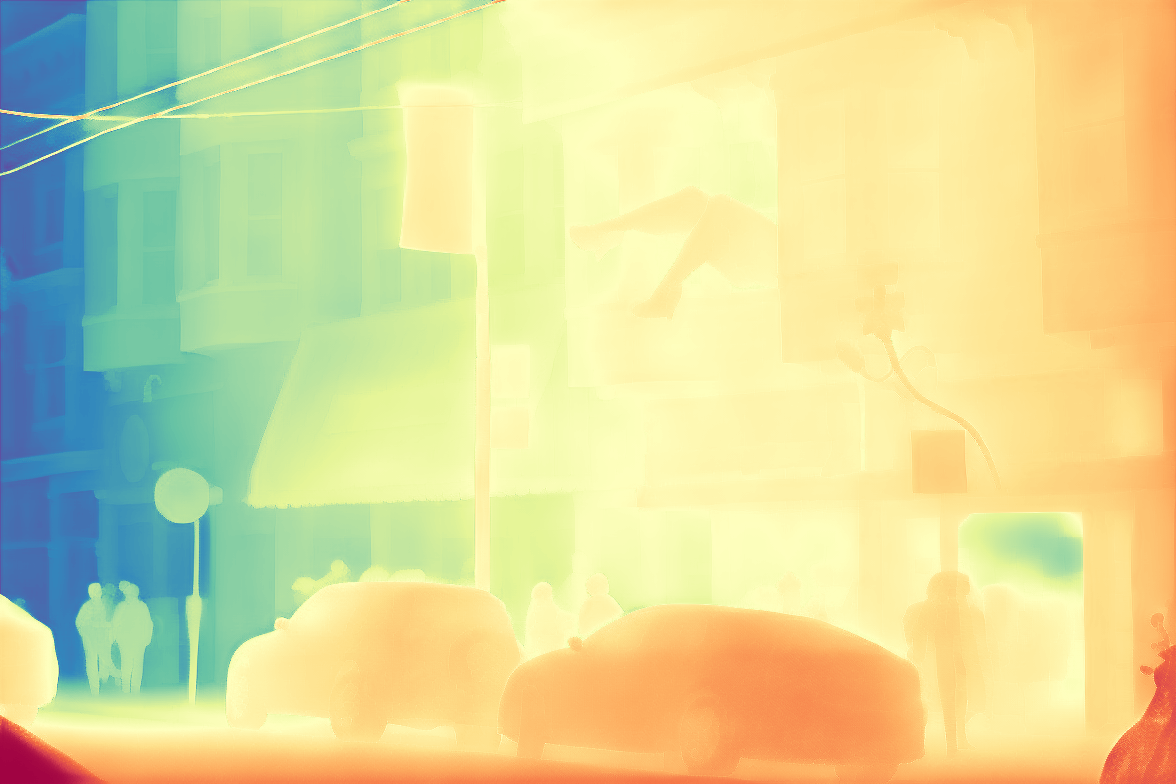

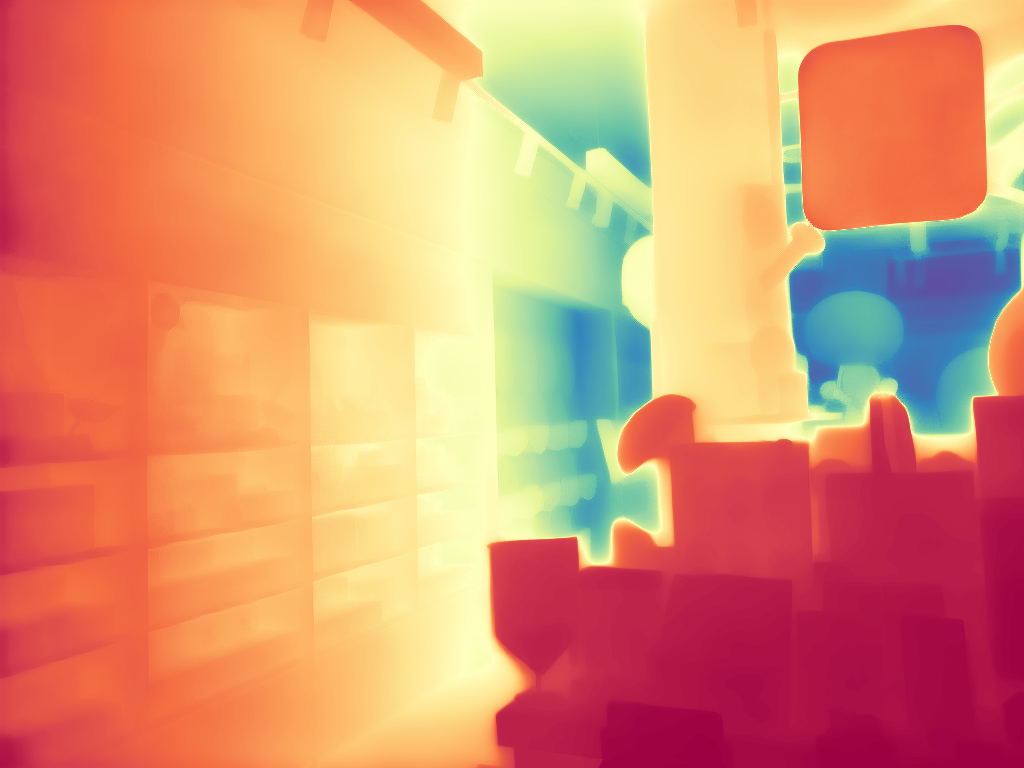

We present SharpDepth, a diffusion-based depth model that refines the predictions of metric depth estimators such as UniDepth without relying on ground-truth depth data. Our method recovers sharp details in thin structures and improves overall point cloud quality.

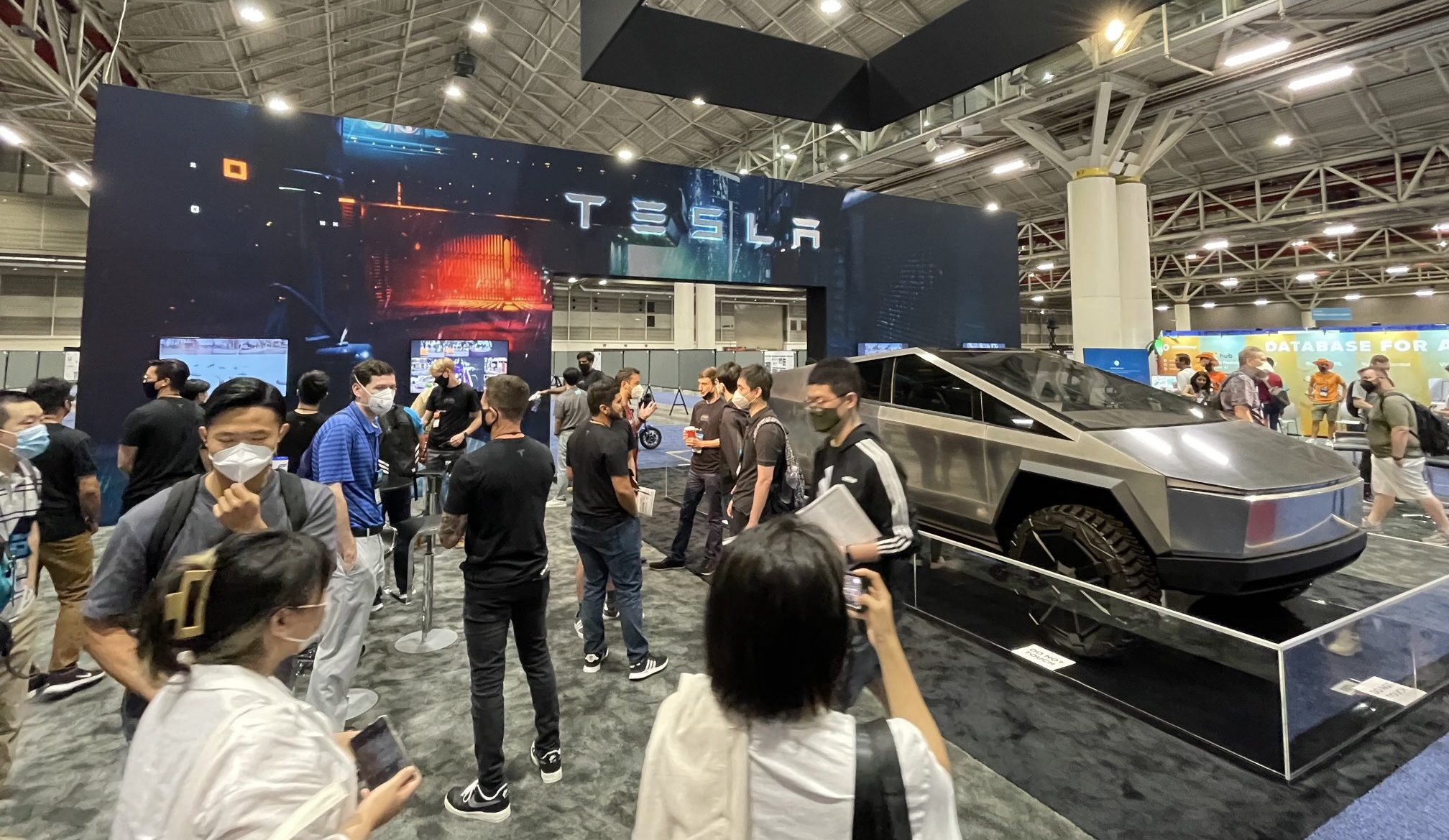

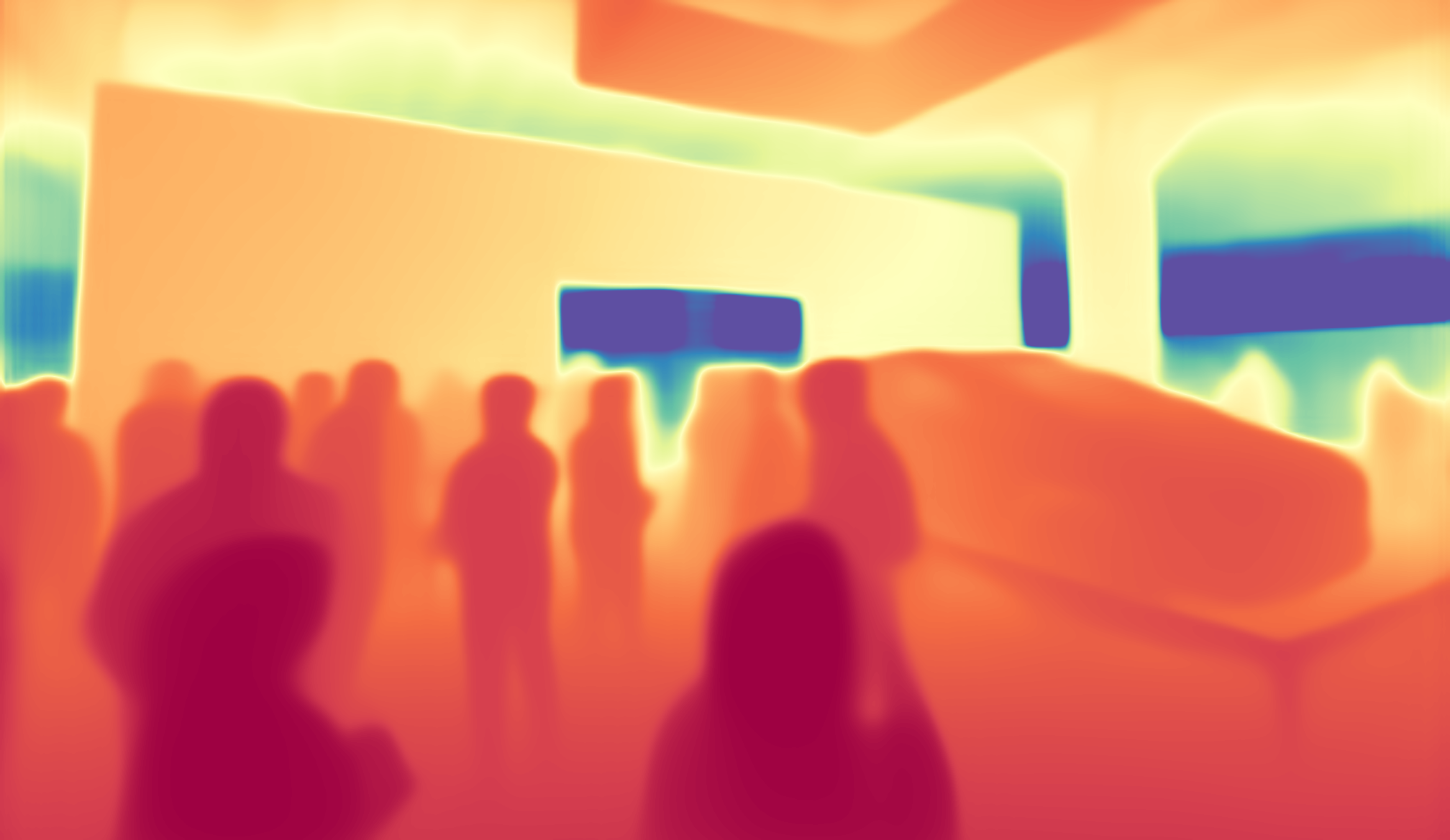

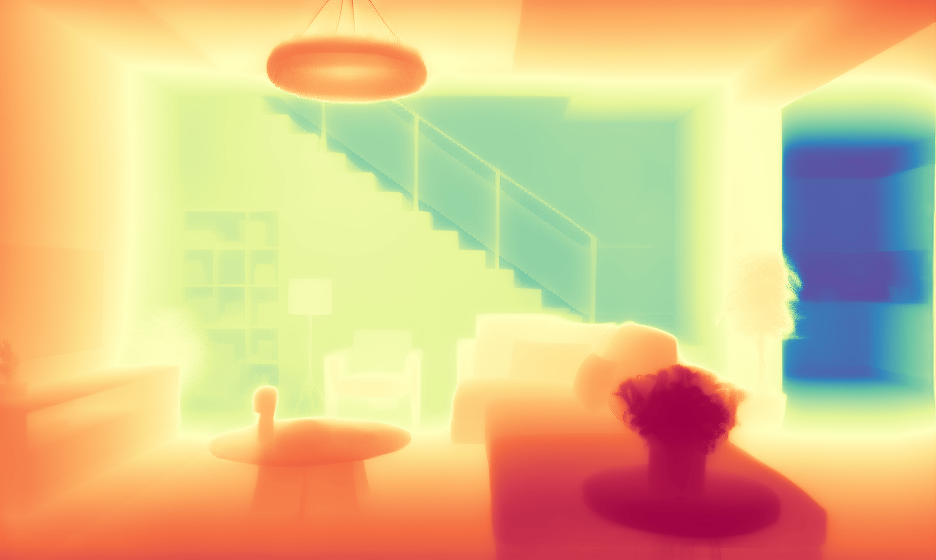

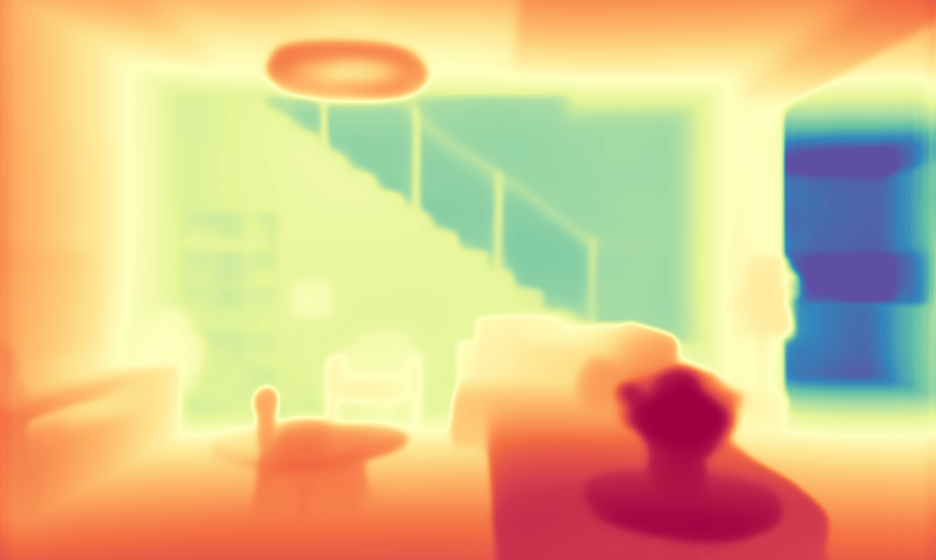

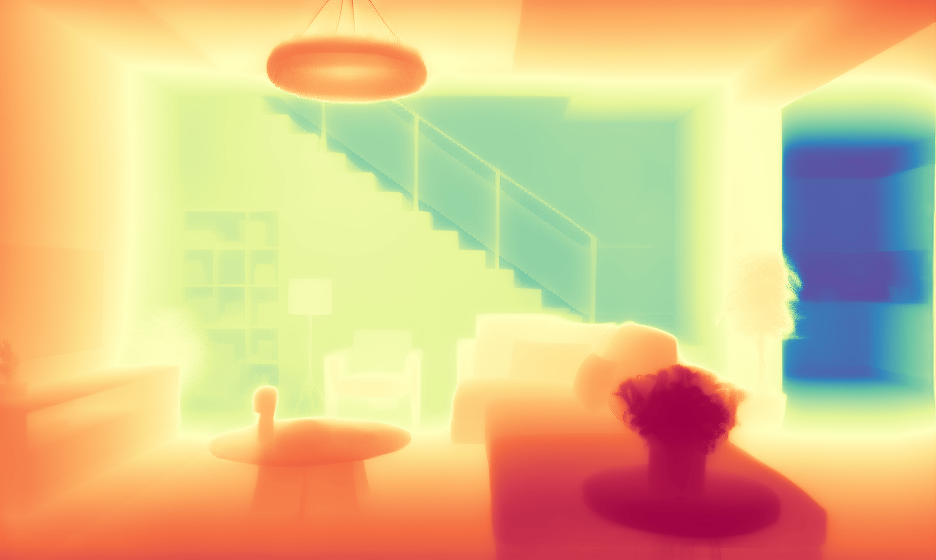

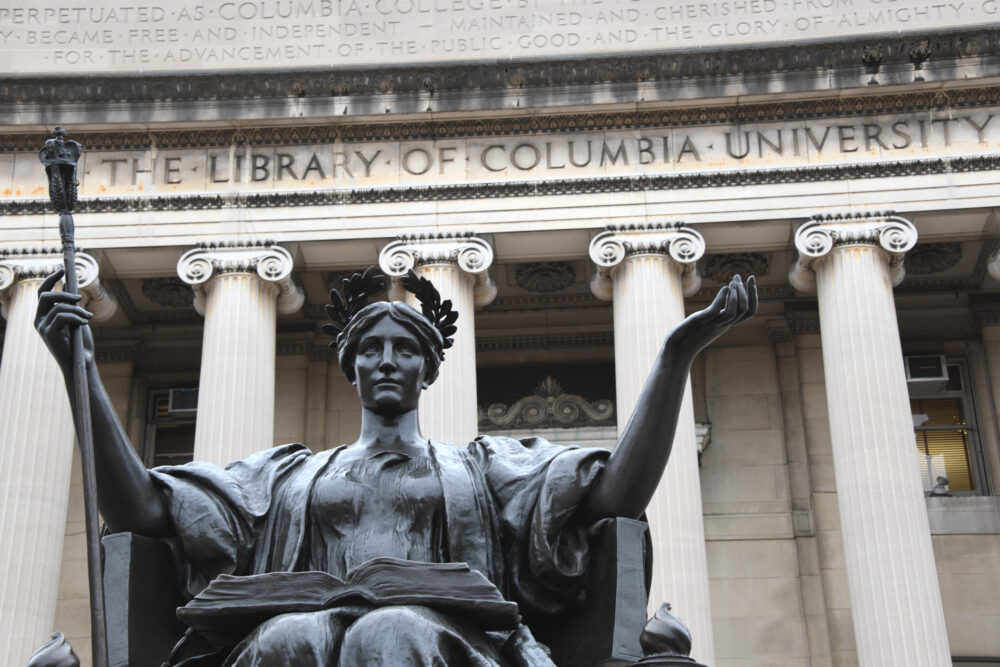

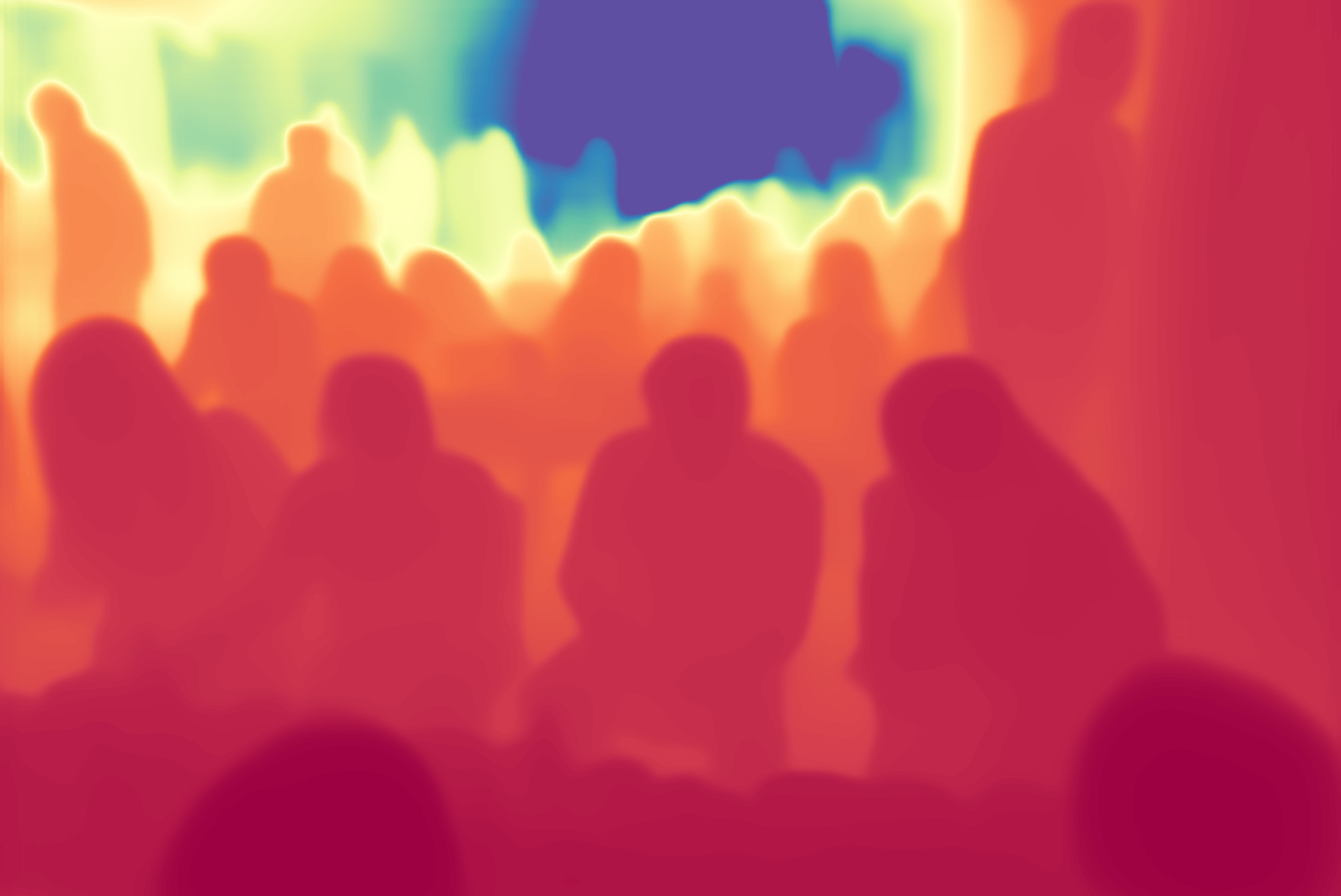

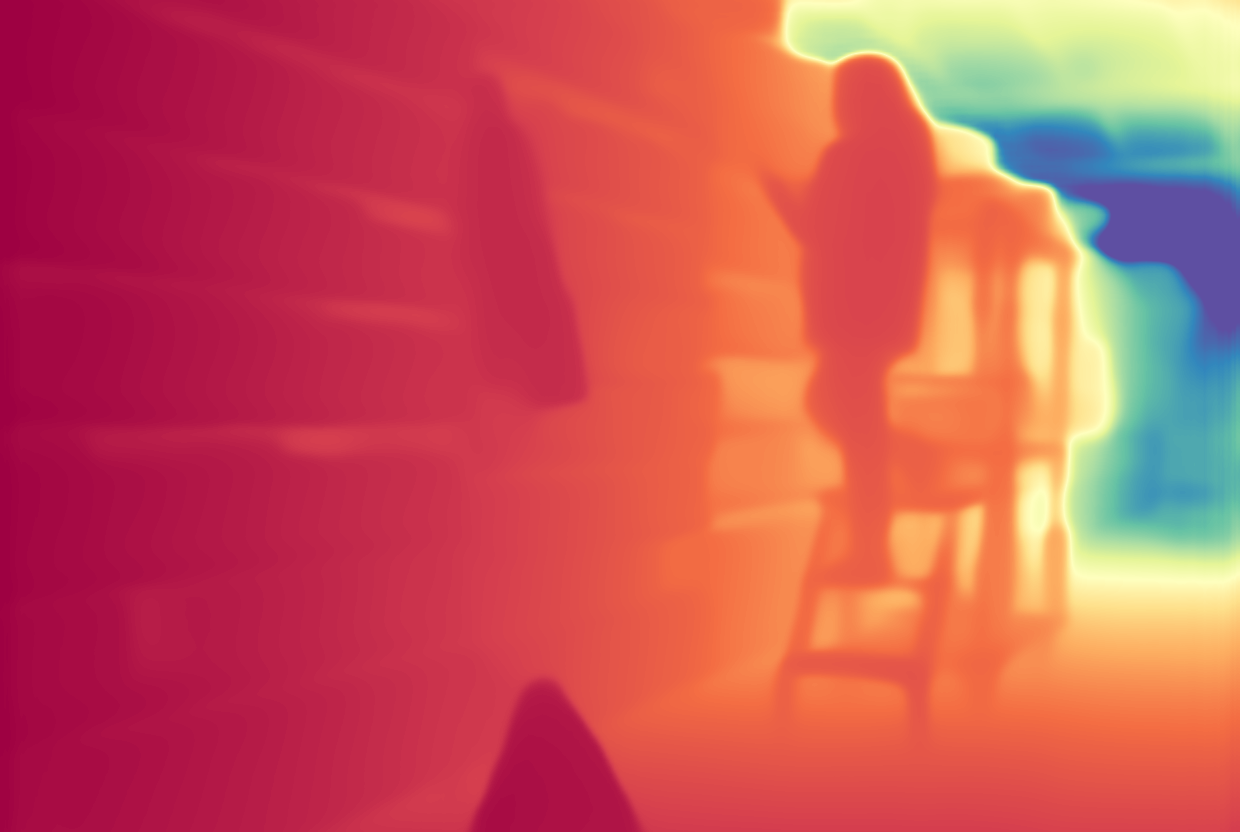

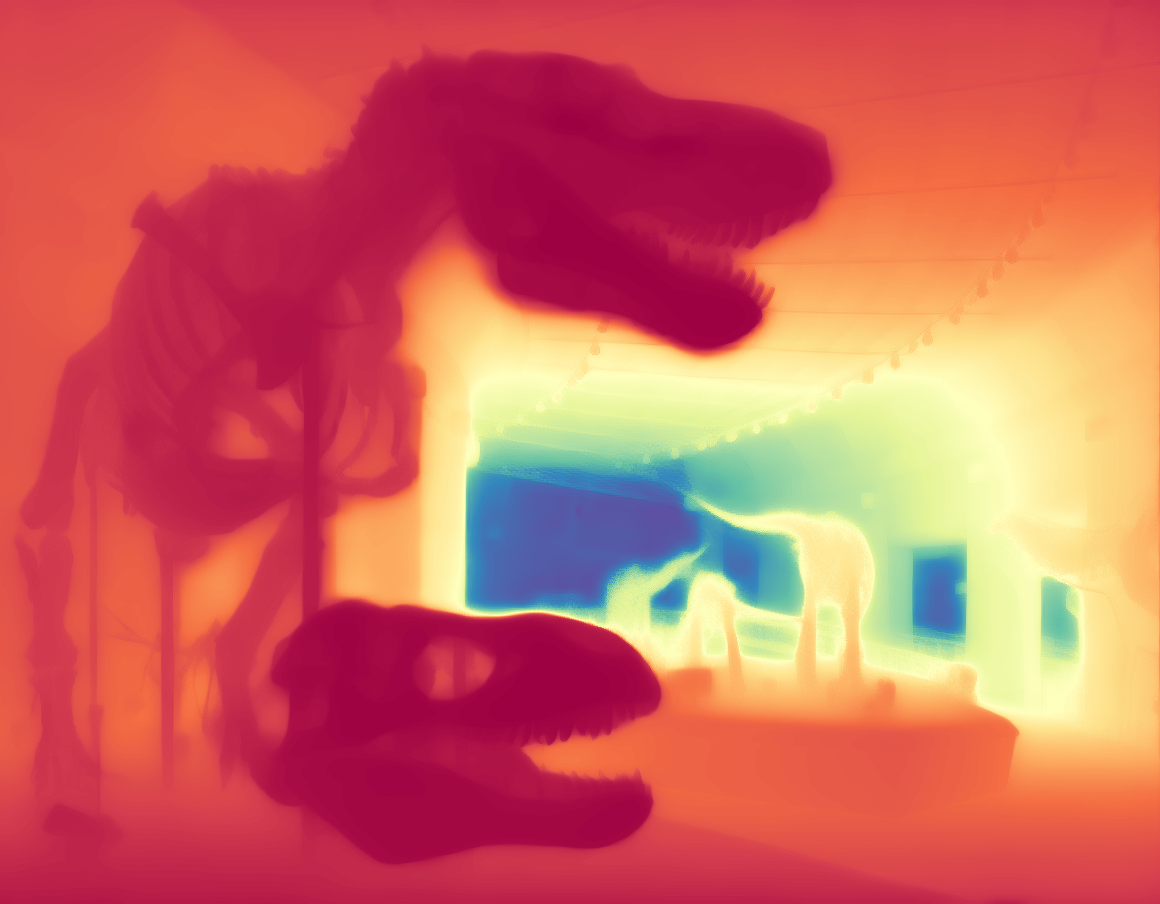

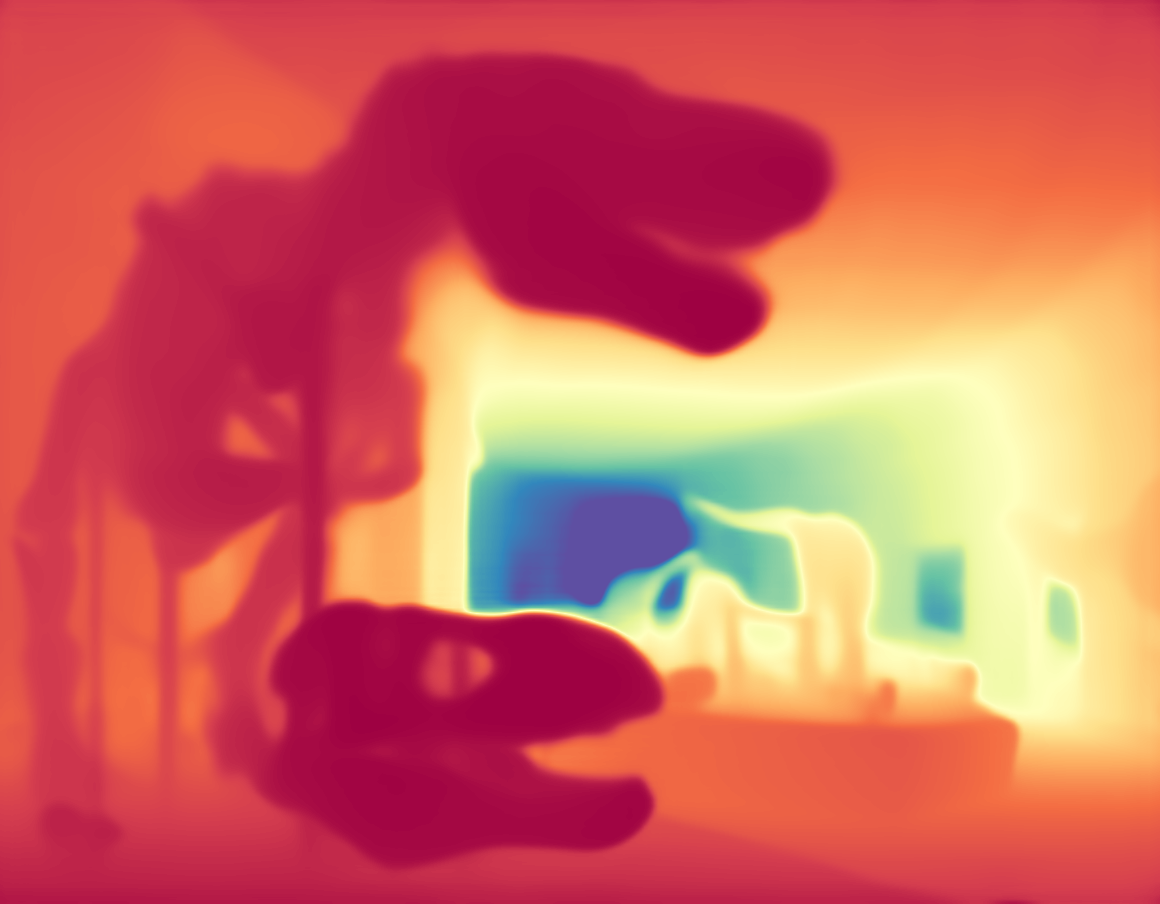

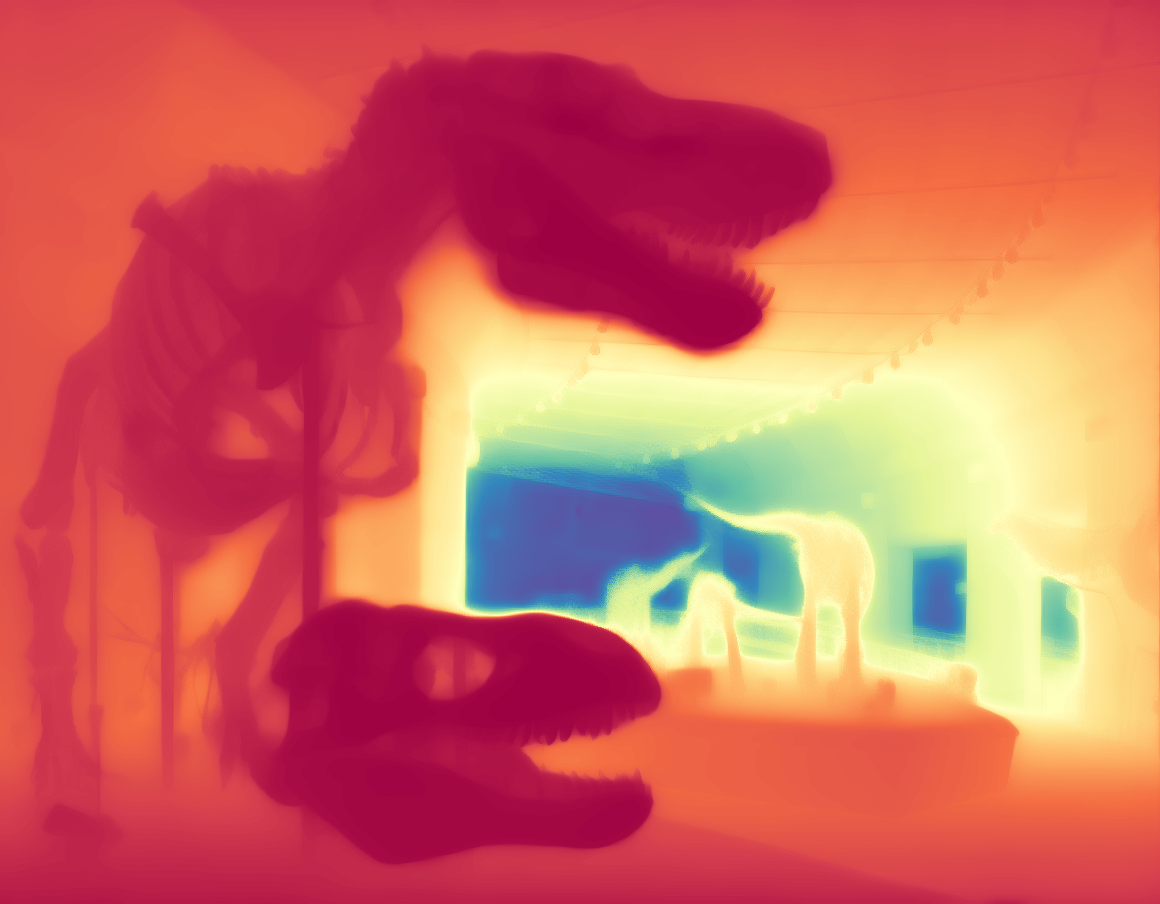

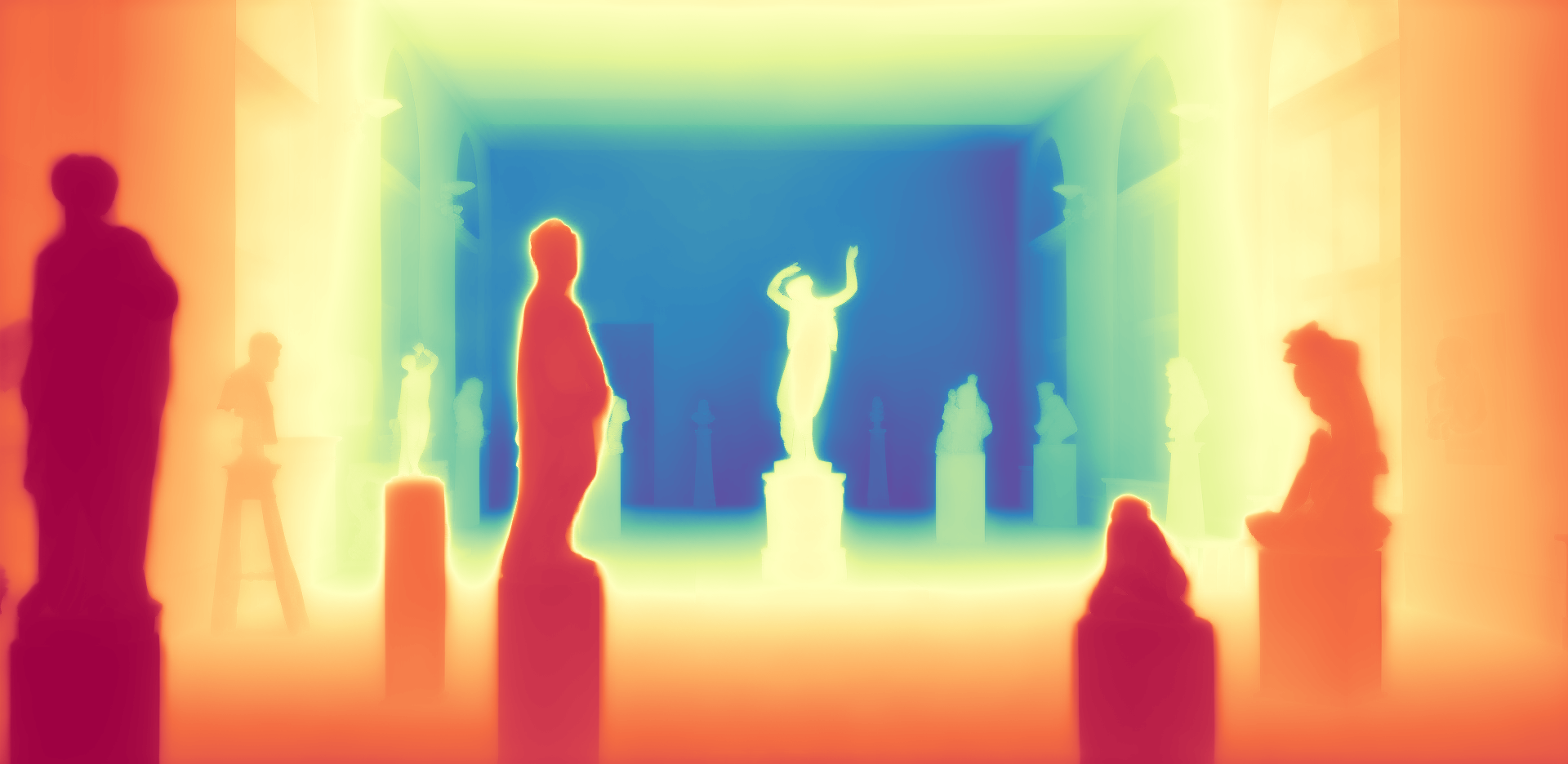

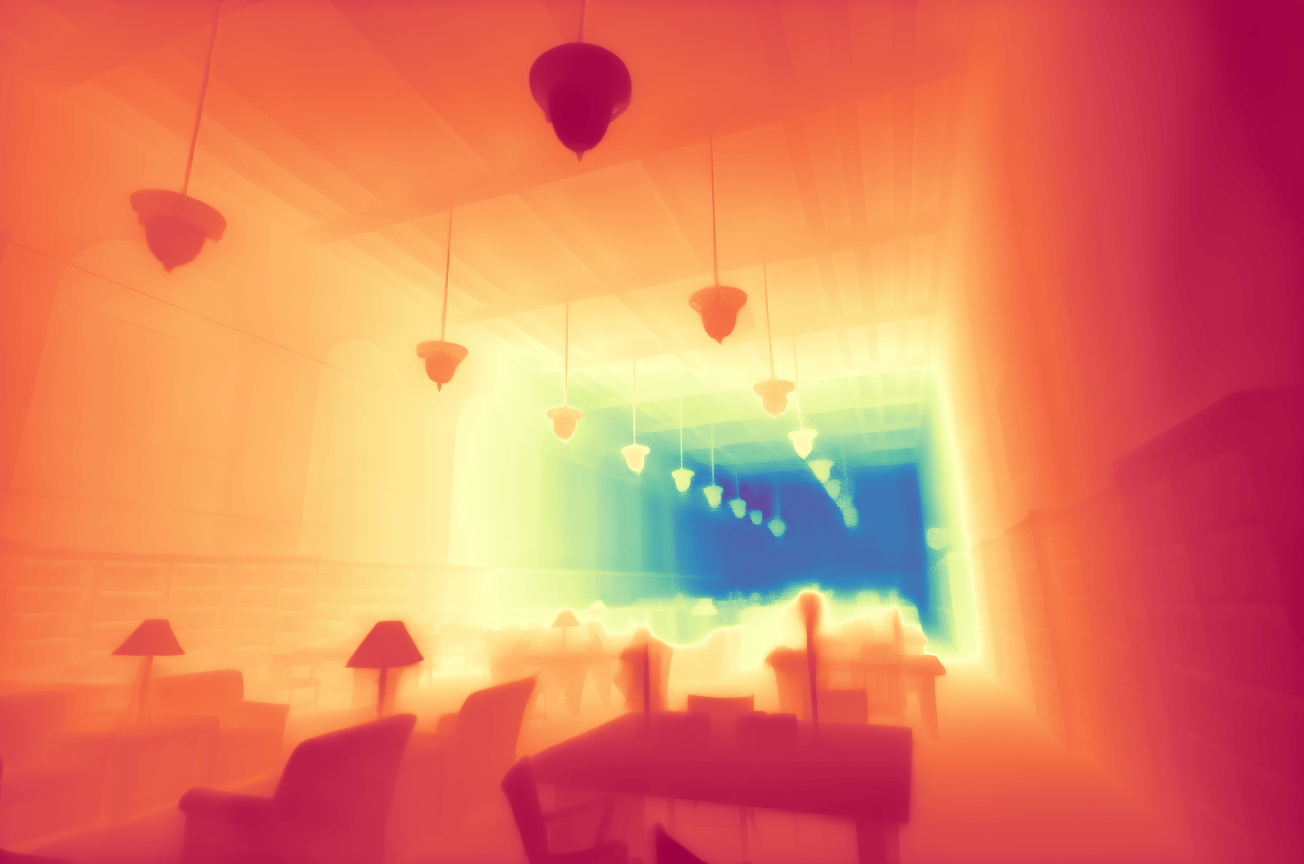

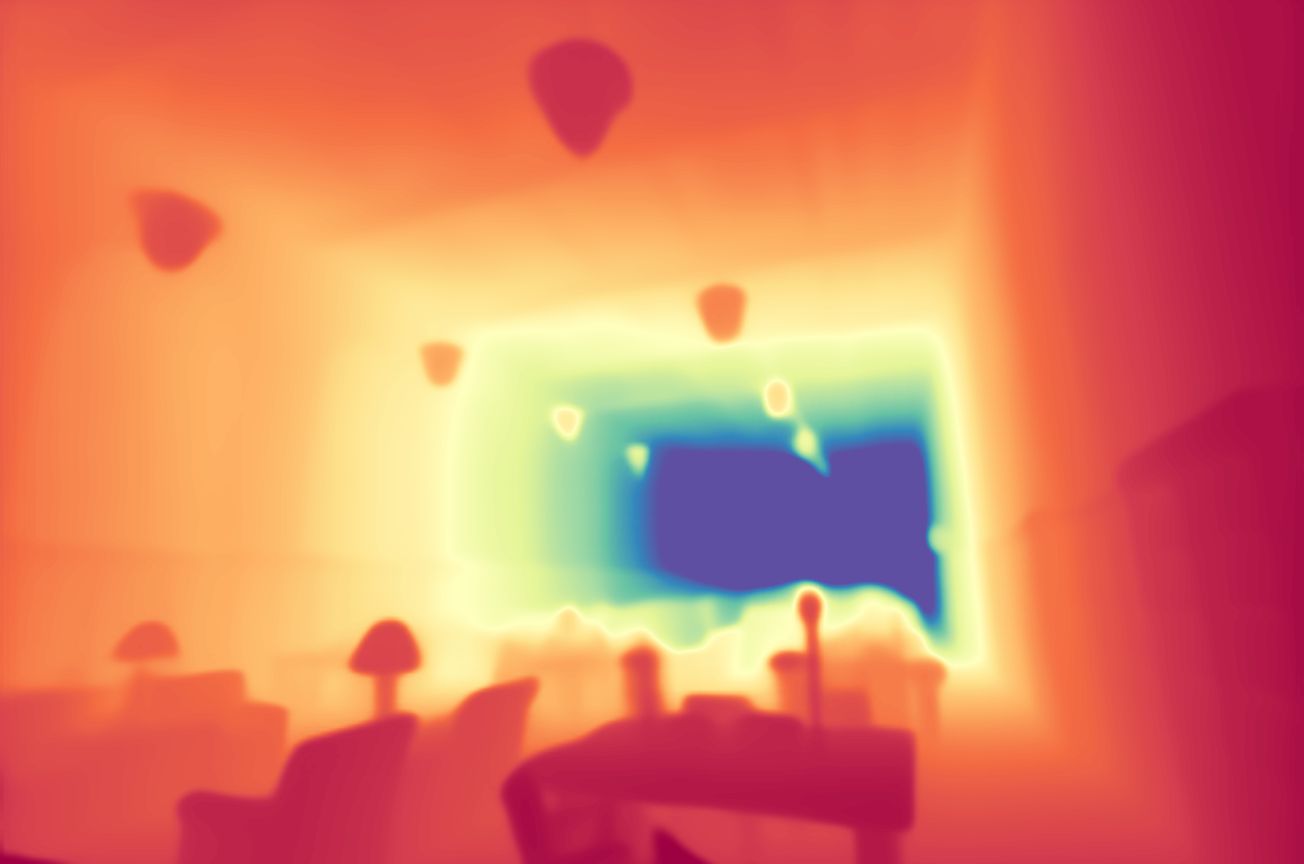

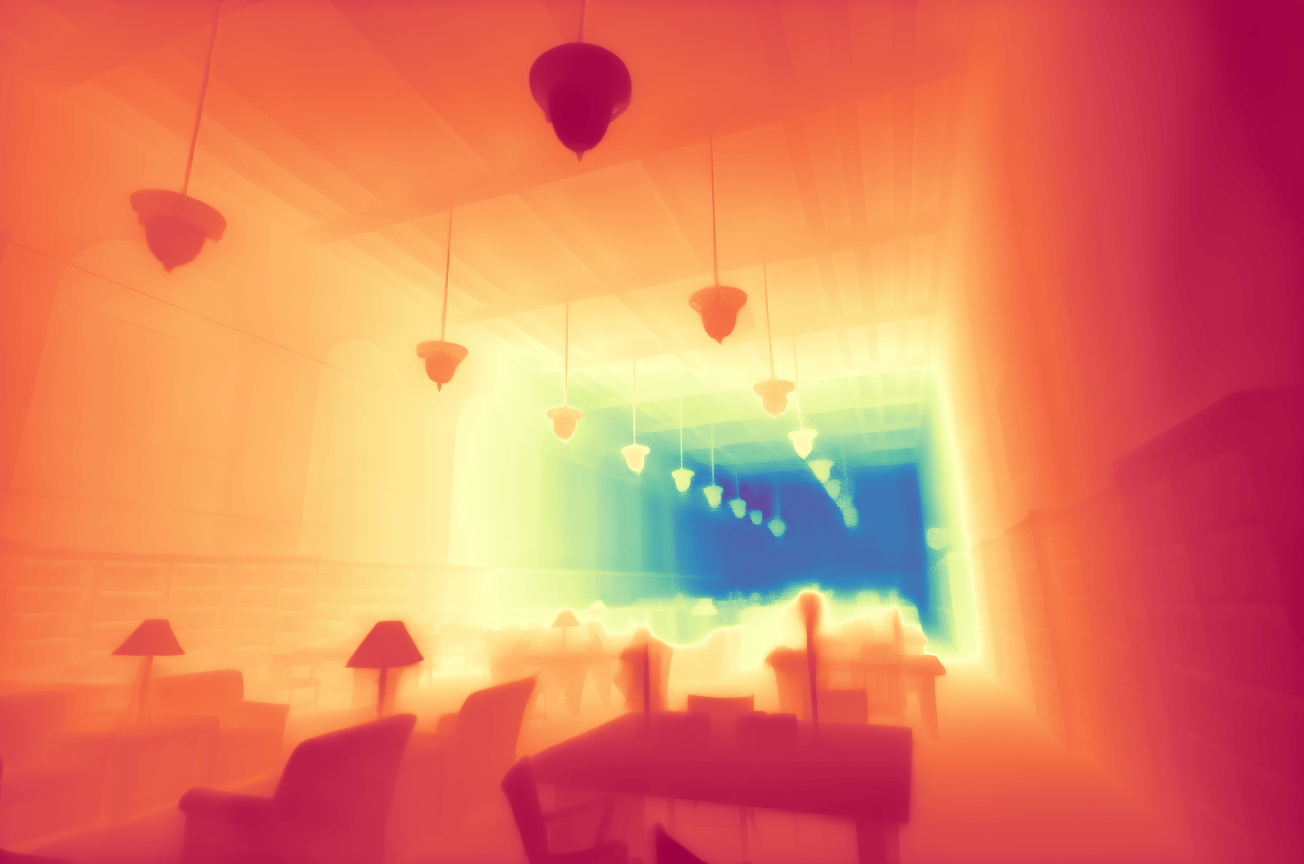

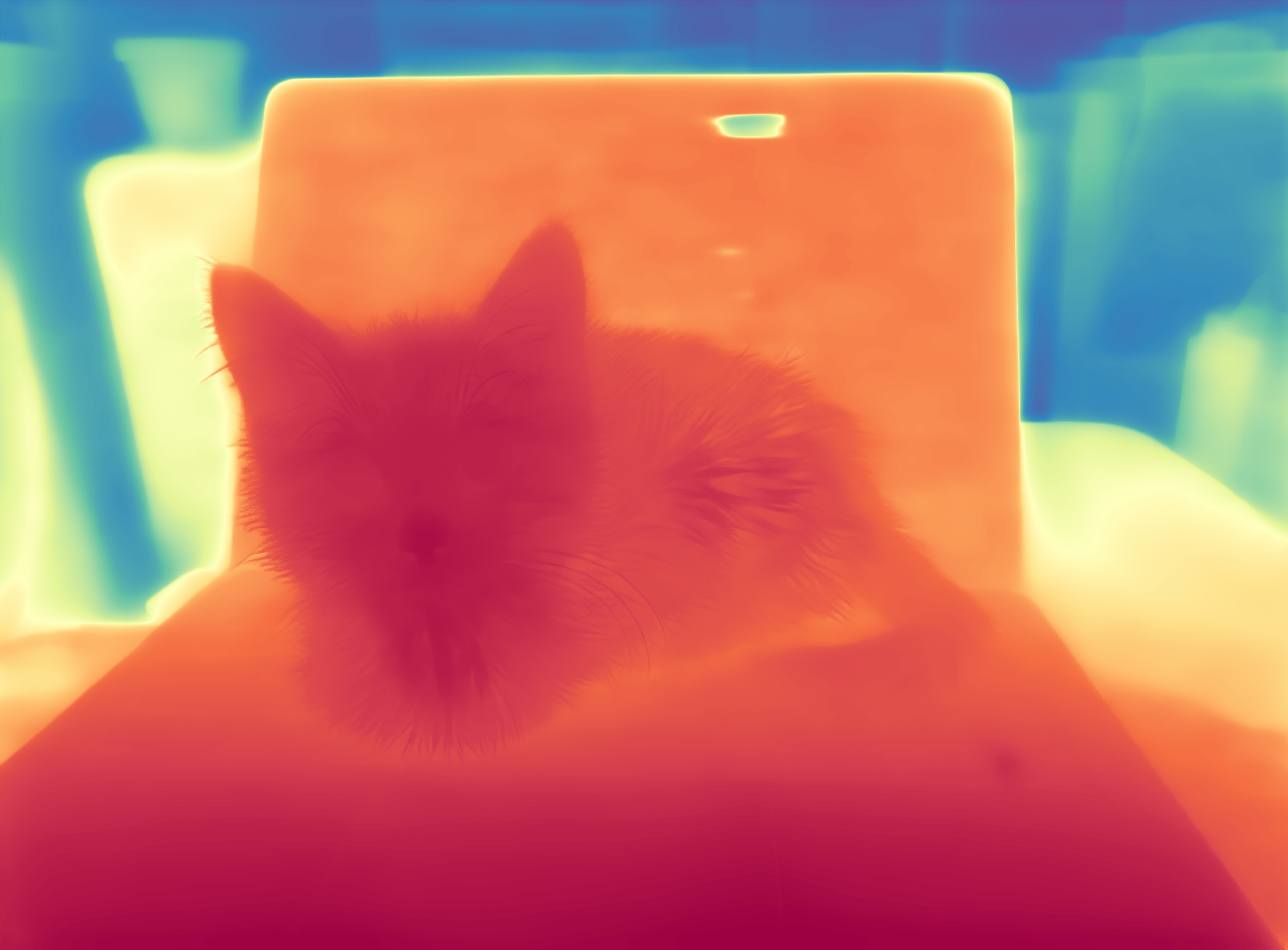

The gallery below compares SharpDepth with UniDepth, a previous state-of-the-art metric depth estimator, on several images from the internet.

Gallery

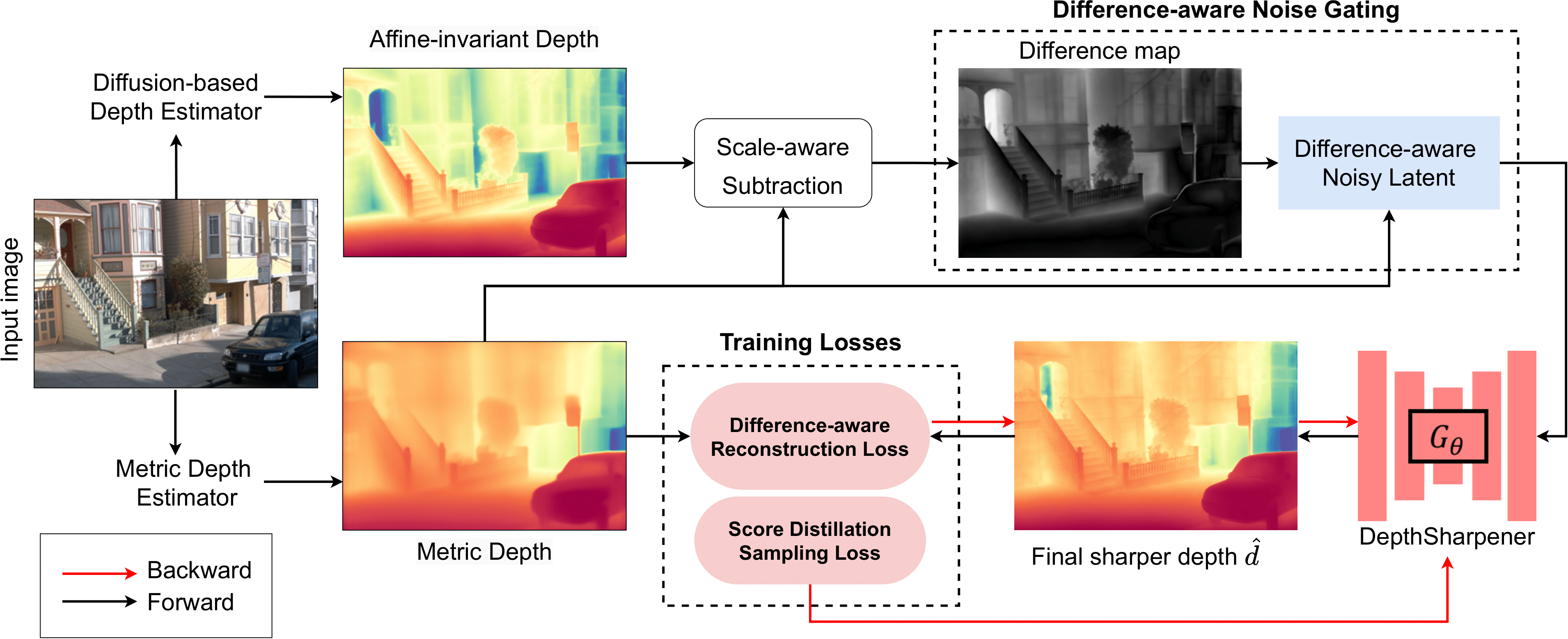

How it works

Fine-tuning protocol

Given an input image $I$, we first run a pre-trained metric depth model $f_D$ and a diffusion-based depth model $f_G$ to produce a metric depth map $d$ and an affine-invariant depth map $\tilde{d}$, respectively. Our goal is to generate a sharpened metric depth map $\hat{d}$ using our proposed sharpening model $G_\theta$, whose architecture is based on state-of-the-art pre-trained depth diffusion models. Instead of naively relying on the forward process of the diffusion model, we introduce a Noise-aware Gating mechanism, which gives the sharpener $G_\theta$ explicit guidance on uncertain regions. To enable ground-truth-free fine-tuning, we use a Score Distillation Sampling (SDS) loss to distill fine-grained details from the pre-trained diffusion depth model $f_G$ and a Noise-Aware Reconstruction Loss to ensure accurate metric prediction.
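To illustrate how regions where the two depth predictions disagree might be located, the dependency-free Python sketch below aligns the affine-invariant depth $\tilde{d}$ to the metric depth $d$ with a least-squares scale and shift, then normalizes the absolute residual to $[0, 1]$. This is an assumption made purely for illustration: the function names are hypothetical, depth maps are represented as flat lists of pixel values, and the text above does not specify how SharpDepth derives its uncertainty signal, so this should not be read as the authors' implementation.

```python
def align_scale_shift(affine, metric):
    """Least-squares scale s and shift t such that s * affine + t ~ metric.

    Both inputs are flat lists of per-pixel depth values (hypothetical layout).
    """
    if len(affine) != len(metric) or not affine:
        raise ValueError("depth maps must be non-empty and of equal length")
    n = len(affine)
    mean_a = sum(affine) / n
    mean_m = sum(metric) / n
    var_a = sum((a - mean_a) ** 2 for a in affine)
    if var_a == 0:
        raise ValueError("affine-invariant depth is constant; scale is undefined")
    cov = sum((a - mean_a) * (m - mean_m) for a, m in zip(affine, metric))
    s = cov / var_a
    t = mean_m - s * mean_a
    return s, t


def disagreement_map(affine, metric):
    """Per-pixel |s * affine + t - metric|, rescaled so the largest value is 1."""
    s, t = align_scale_shift(affine, metric)
    residual = [abs(s * a + t - m) for a, m in zip(affine, metric)]
    peak = max(residual)
    if peak == 0:
        # The two predictions agree everywhere after alignment.
        return [0.0] * len(residual)
    return [r / peak for r in residual]


# Toy example: the last pixel of the metric map deviates from the linear trend.
affine_depth = [0.0, 0.25, 0.5, 0.75, 1.0]
metric_depth = [2.0, 3.0, 4.0, 5.0, 9.0]
print(disagreement_map(affine_depth, metric_depth))
```

Values near 1 mark pixels where the two models disagree most after alignment. A map of this kind is one plausible candidate for the "uncertain regions" mentioned above, though the actual gating signal may be computed differently (for example, in latent space).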

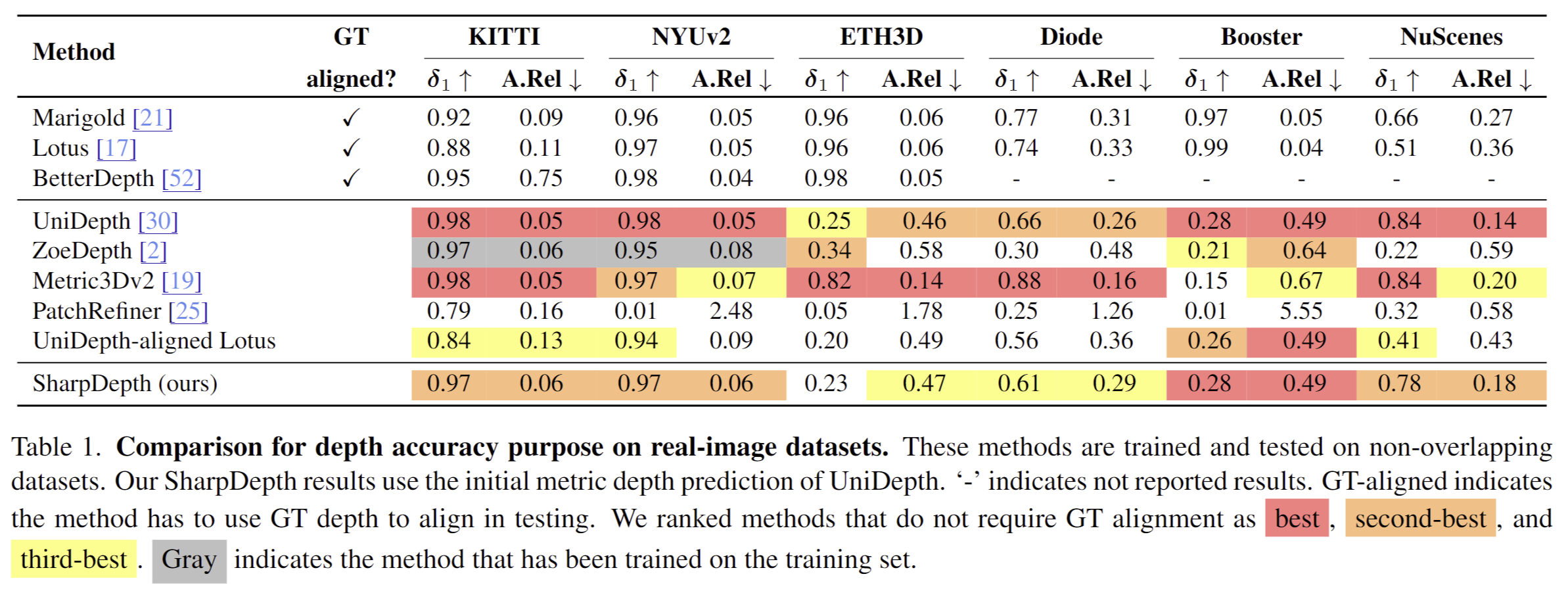

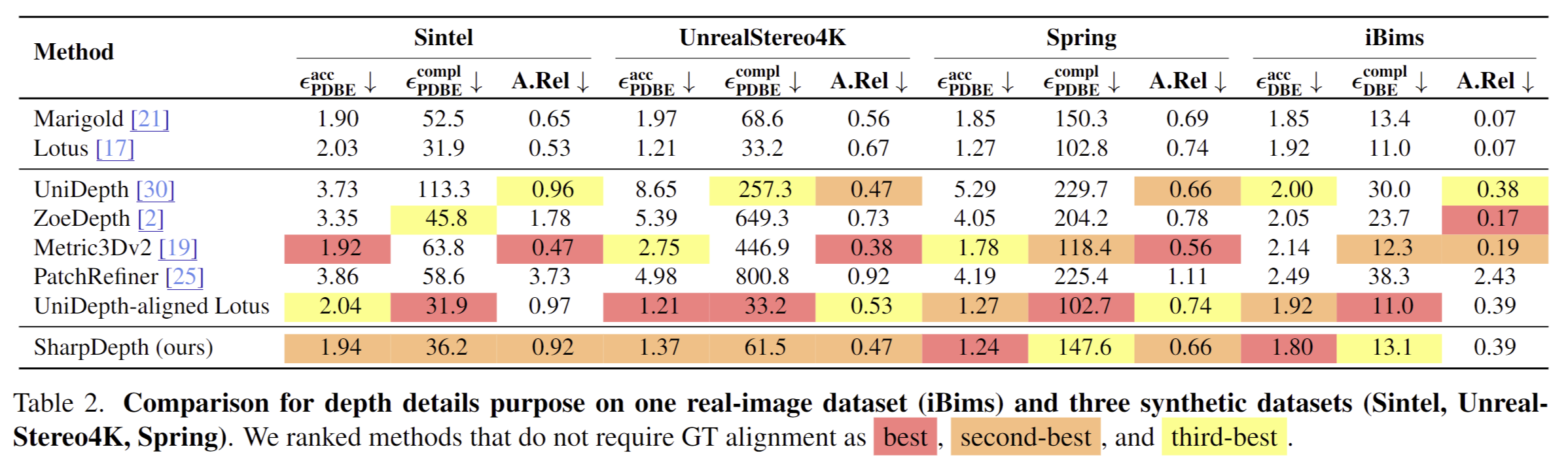

Comparison with other methods

Quantitative comparison of SharpDepth with state-of-the-art (SOTA) metric depth estimators on several zero-shot benchmarks. Our method achieves accuracy comparable to that of metric depth models. Further evaluation on synthetic datasets (Sintel, UnrealStereo, and Spring) and a real dataset (iBims) shows that our method significantly outperforms UniDepth in both edge accuracy and completeness. By leveraging priors from the pre-trained diffusion model, our approach produces sharper depth discontinuities while maintaining high accuracy across datasets. In contrast, discriminative methods often produce overly smooth edges, leading to higher completeness errors.

Citation

@article{pham2024sharpdepth,
  title={SharpDepth: Sharpening Metric Depth Predictions Using Diffusion Distillation},
  author={Pham, Duc-Hai and Do, Tung and Nguyen, Phong and Hua, Binh-Son and Nguyen, Khoi and Nguyen, Rang},
  journal={arXiv preprint arXiv:2411.18229},
  year={2024}
}